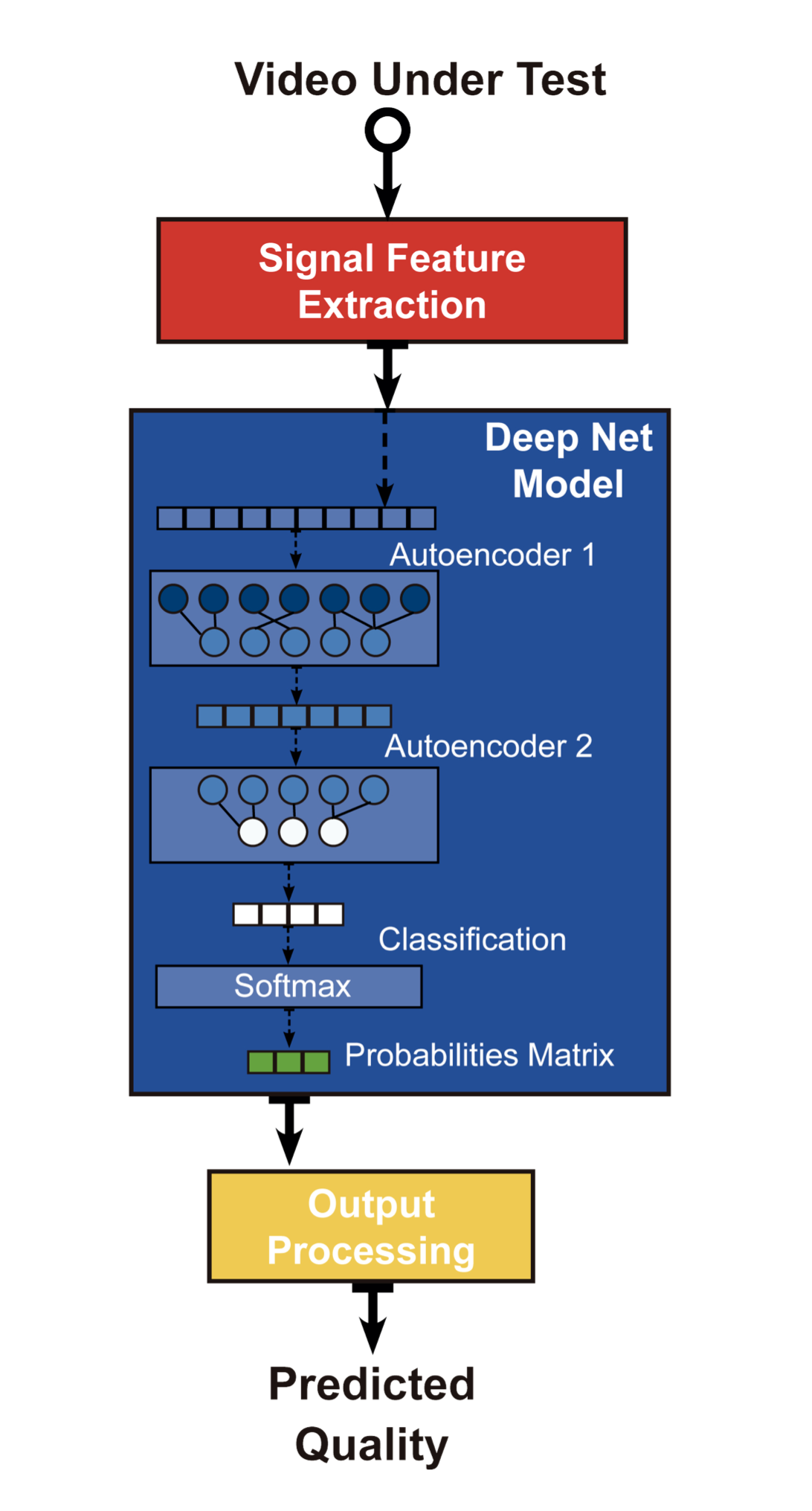

Welcome and congratulations to Helard on becoming a Postdoctoral Research Associate at QxLab. Helard joined QxLab from Universidade de Brasília, where his PhD research into multimodal QoE models led to the development of an audiovisual model for predicting Quality of Experience using data-driven neural networks and autoencoders. His current research interests include audio-visual signals, quality metrics, multimedia processing and machine learning.

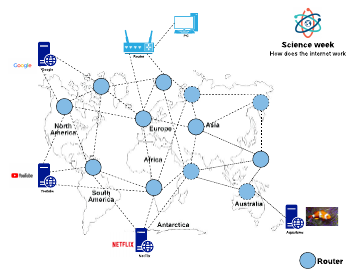

Science Week in Ireland: Hamed explains how the Internet works to a 3rd class primary school

Last week was Science Week in Ireland. As part of our communication and outreach engagement, Hamed visited a local primary school to explain how the internet works to a class of eight-year-old students.

He started by asking the question: “Who knows how a postal package gets delivered?”

He used this example to illustrate the role of routers in computer networks. Once the students understood how routers operate in a network, they were able to apply their knowledge to an exciting game. Hamed used the game to illustrate routing convergence, with the children exchanging paper notes giving the location of servers. They also simulated packet forwarding by sending a request from a user to the server and a response back again.
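For readers curious about what the paper-note game models, here is a minimal sketch of distance-vector routing, assuming the game works like the classic scheme in which each router shares its table with its neighbours until nothing changes (convergence), and packets are then forwarded hop by hop. The four-router topology, link costs and the function names `converge` and `forward` are hypothetical illustrations, not taken from the classroom game itself.

```python
import math

# Hypothetical classroom topology: (router_a, router_b, link_cost).
LINKS = [("A", "B", 1), ("B", "C", 1), ("C", "D", 1), ("A", "D", 5)]


def converge(links):
    """Distance-vector routing: routers repeatedly swap their tables
    with neighbours (the 'paper notes') until no table changes."""
    neighbours = {}
    for a, b, cost in links:
        neighbours.setdefault(a, {})[b] = cost
        neighbours.setdefault(b, {})[a] = cost
    # table[router][destination] = (total_cost, next_hop)
    table = {r: {r: (0, r)} for r in neighbours}
    rounds = 0
    changed = True
    while changed:
        changed = False
        rounds += 1
        # Every router reads the notes its neighbours held at the start of the round.
        notes = {r: dict(t) for r, t in table.items()}
        for router, links_out in neighbours.items():
            for nb, link_cost in links_out.items():
                for dest, (cost, _) in notes[nb].items():
                    best = table[router].get(dest, (math.inf, None))[0]
                    if link_cost + cost < best:
                        # Cheaper route found: go via this neighbour.
                        table[router][dest] = (link_cost + cost, nb)
                        changed = True
    return table, rounds


def forward(table, src, dst):
    """Forward a packet hop by hop using each router's next-hop entry."""
    path = [src]
    while path[-1] != dst:
        path.append(table[path[-1]][dst][1])
    return path


table, rounds = converge(LINKS)
print(forward(table, "A", "D"))  # request from user at A to server at D: ['A', 'B', 'C', 'D']
print(forward(table, "D", "A"))  # response back to the user: ['D', 'C', 'B', 'A']
```

Note that the request avoids the direct A-D link: once the tables have converged, router A knows the three-hop route via B costs 3, which is cheaper than the direct link's cost of 5.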

“Sound is the vocabulary of our world” @ the Abbey Road Hackathon

Pheobe participated in the Abbey Road Music Tech Hackathon, winning two awards: Best Example of Spatial Audio and Best Use of Audio Commons.

Her team, Hear the World, built a platform that sonifies the physical world, using spatial sound techniques to let people who cannot see perceive their surroundings through sound.

Well done Pheobe and the Hear the World team for delivering a novel audio quality of experience!

The Hackathon, held on 9-10 November, has had QxLab members taking part for the last two years, with Alessandro joining the action in 2018.

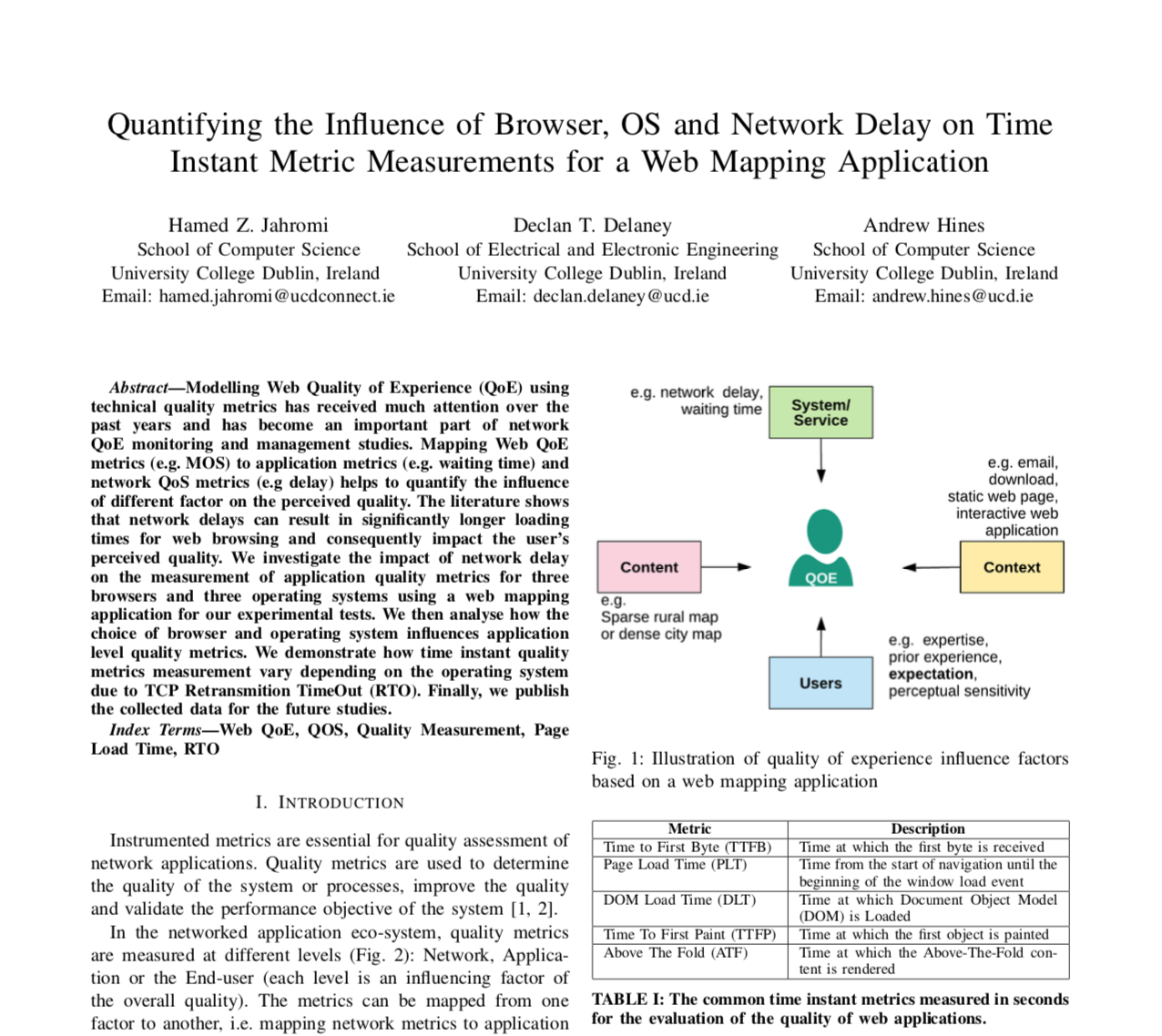

How Do Different Browsers Handle Network Delays?

Hamed is in China at the IEEE International Conference on Communication Technology (ICCT) where he will present his work “Quantifying the Influence of Browser, OS and Network Delay on Time Instant Metric Measurements for a Web Mapping Application”.

Many factors influence the quality of experience when using interactive web-based applications such as Google Maps. In this work, Hamed explores how the operating system, the browser and network delay influence time instant metric measurements for a web mapping application.

QxLab Grows with Two New Grants for 5G and Speech Research

Dr Wissam Jassim and Dr Alcardo (Alex) Barakabitze joined QxLab in October.

Wissam is a research fellow working on GAN codec quality prediction. His research is funded by a new grant awarded to QxLab by Google. He will be working closely with the Google Chrome team to develop a new speech quality model that works with generative speech codecs.

Alex is an IRC Government of Ireland Postdoctoral Fellow developing QoE-centric management of multimedia services and orchestration of resources in 5G networks using SDN and NFV.

Wissam and Alex will be based in the Insight Centre at UCD.

Alessandro at the Turing

Alessandro has temporarily relocated to London, where he will be based at the Alan Turing Institute as part of a Turing-Insight research collaboration. The Turing's Enrichment programme sees a cohort of 54 students based at the Turing in London for 2019/20.

Spending time in London will give Alessandro access to the facilities of the Turing, based in the British Library. He will also have the opportunity to visit Queen Mary University of London where his co-supervisor Emmanouil Benetos is based.

While in London, Alessandro will continue his PhD research using deep learning models to predict the quality of audio archives.

NAVE: An Autoencoder-based Video Quality Metric

This month our latest research will be presented at the 2019 IEEE International Conference on Image Processing (ICIP) in Taipei, Taiwan. ICIP is the premier event for image and video processing, featuring international researchers and experts in the field.

We will present a Video Quality Metric that uses a deep autoencoder to train a model for video quality prediction. The presentation will be on Tuesday, 24 September at noon as part of a wider session on Novel Approaches for Image & Video Quality Assessment.

My paper, along with the other accepted papers, is currently available as a free download on IEEE Xplore until 25 September 2019. I hope you'll download it and share any insights, feedback and questions with me.

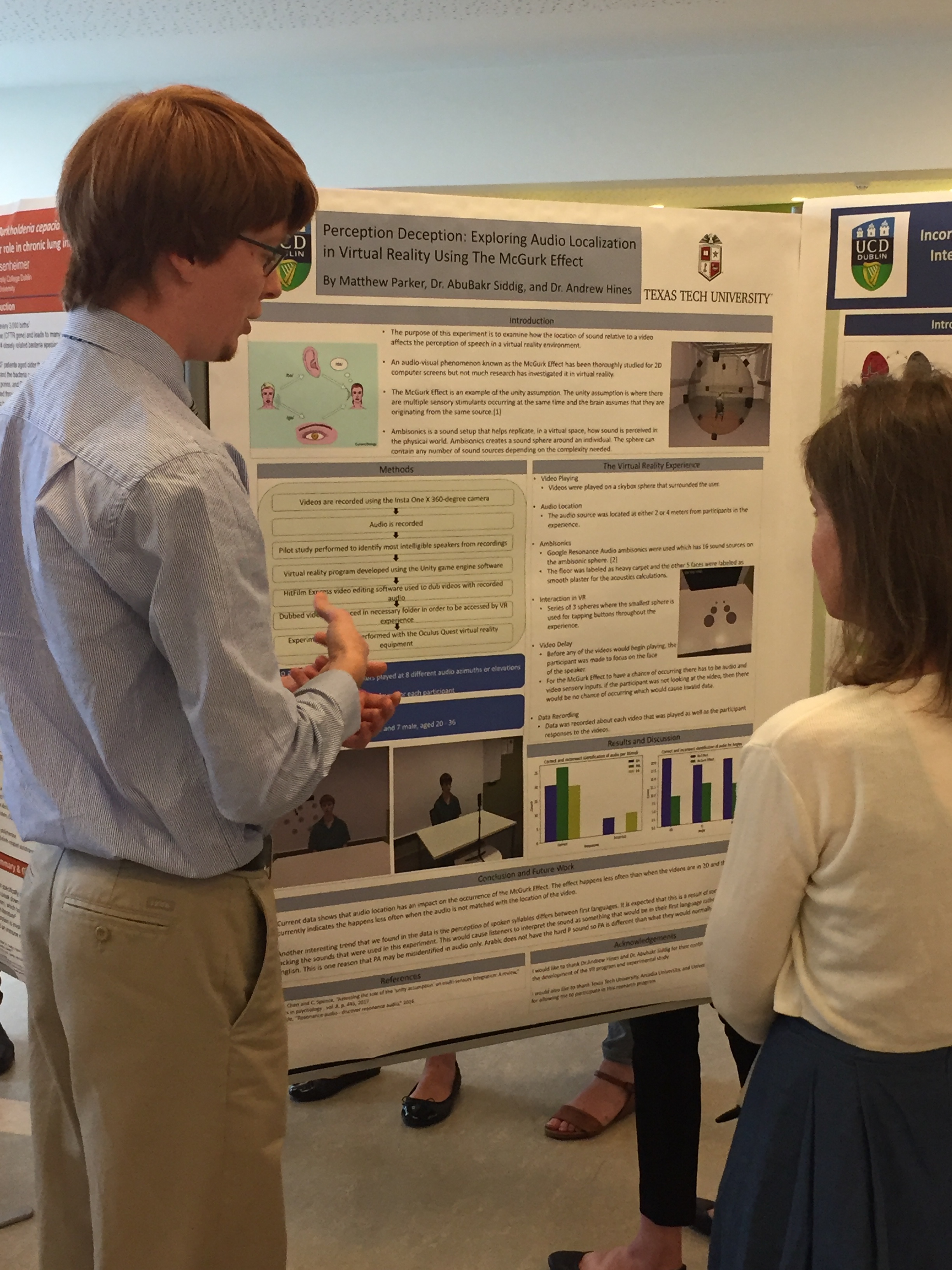

3D Sound and Vision with the Oculus Quest

Matthew Parker from Texas Tech University spent the summer at QxLab, hosted by Insight and the School of Computer Science. Over eight weeks, he developed a virtual reality environment using the Oculus Quest, the newest generation of wireless VR headset, to explore audio-visual fusion.

The audio and video immersion provided by virtual reality headsets is what makes virtual reality so enticing. A better understanding of this audio-visual immersion is needed, particularly in streaming applications where bandwidth is limited: the full 360-degree spaces that are the trademark of virtual reality require large amounts of data to deliver at high quality. His work examined audio localization as a possible candidate for data compression by exploiting an audio-visual phenomenon known as the McGurk Effect.

The McGurk Effect occurs when someone experiences mismatched audio and video stimuli and perceives a sound that is different from either of them. The classic example is a video of a talker saying /ga/ dubbed with the audio of someone saying /ba/, which is usually perceived as /da/.

For audio he used Ambisonics, a full-sphere surround sound format that can be rendered over headphones, using head-related transfer functions and models of acoustic environments to mimic how humans naturally hear sounds.
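To give a flavour of how Ambisonics stores direction, here is a minimal sketch that encodes a mono sample into first-order B-format (four channels W, X, Y, Z). The post does not say which ambisonic order or channel convention the project used, so the first-order FuMa convention below (azimuth measured anticlockwise from straight ahead, W scaled by 1/√2) is an assumption, and the function name `encode_fuma` is hypothetical. The binaural step, where HRTFs turn these channels into headphone audio, is not shown.

```python
import math


def encode_fuma(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order B-format (W, X, Y, Z)
    using the FuMa convention: azimuth anticlockwise from straight
    ahead, elevation upwards from the horizontal plane."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2)                 # omnidirectional channel, -3 dB in FuMa
    x = sample * math.cos(az) * math.cos(el)  # front-back figure-of-eight
    y = sample * math.sin(az) * math.cos(el)  # left-right figure-of-eight
    z = sample * math.sin(el)                 # up-down figure-of-eight
    return w, x, y, z


# A talker straight ahead versus the same voice moved 90 degrees to the left.
print(encode_fuma(1.0, 0, 0))
print(encode_fuma(1.0, 90, 0))
```

Moving the voice away from the speaker's lips only changes the balance between X, Y and Z, which is what makes spatial position a tempting parameter to vary when studying audio-visual fusion.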

Seeking Words of Wisdom

QxLab took a couple of days away from our desks for a co-located writing workshop. Basing ourselves in one of UCD’s new University Club meeting rooms, we spent two days working on our research writing. A starter session reflected on writing style and discussed where to publish. We practiced with some free writing and structured writing exercises and reviewed Brown’s 8 questions as a set of prompts.

- Who are the intended readers? (3-5 names)

- What did you do? (50 words)

- Why did you do it? (50 words)

- What happened? (50 words)

- What do the results mean in theory? (50 words)

- What do the results mean in practice? (50 words)

- What is the key benefit for readers? (25 words)

- What remains unresolved? (no word limit)

These questions, originally devised by Robert Brown but popularized by Rowena Murray, are a great way to get a writing retreat going. The rest of the sessions were spent progressing our writing towards our personal writing objectives – a bit like a natural language “Hackathon”.

For the final session, each of us chose a short piece of our own writing and shared it in a non-judgemental peer review session where the author could choose the scope of their own feedback. A lot of the feedback followed common themes, as we fell into similar traps with our writing.

A recent Twitter thread offered a lot of advice we could relate to, and a key take-home message was to remember that when you read published papers you only see the finished article. The papers you read have been through countless iterations and review feedback sessions from co-authors, reviewers and copy editors. Don't compare your first draft to a published paper!

Useful references

Murray, R. (2005) Writing for Academic Journals. Maidenhead: Open University Press-McGraw-Hill.

Murray, R. & Moore, S. (2006) The Handbook of Academic Writing: A Fresh Approach. Maidenhead: Open University Press-McGraw-Hill.

Fusion Confusion in Massachusetts

Today QxLab's Dr Abubakr Siddig presented collaborative work on immersive multimedia at the International Workshop on IMmersive Mixed and Virtual Environment Systems (MMVE 2019), which is celebrating its 11th edition as part of the ACM MMSys conference at the University of Massachusetts Amherst campus.

The paper, Fusion Confusion: Exploring Ambisonic Spatial Localisation for Audio-Visual Immersion Using the McGurk Effect, looked at the relationship between visual cues and spatial localisation for speech sounds.

The paper found that the McGurk Effect, where visual cues for sounds override what you hear, occurs for spatial audio but is not sensitive to whether the speech sound is aligned in space with the lips of the speaker.

The research was carried out by QxLab's UCD-based researchers and funded by two SFI centres, CONNECT and Insight.

Well done to Abubakr; the presentation and demo were well received by the workshop attendees.