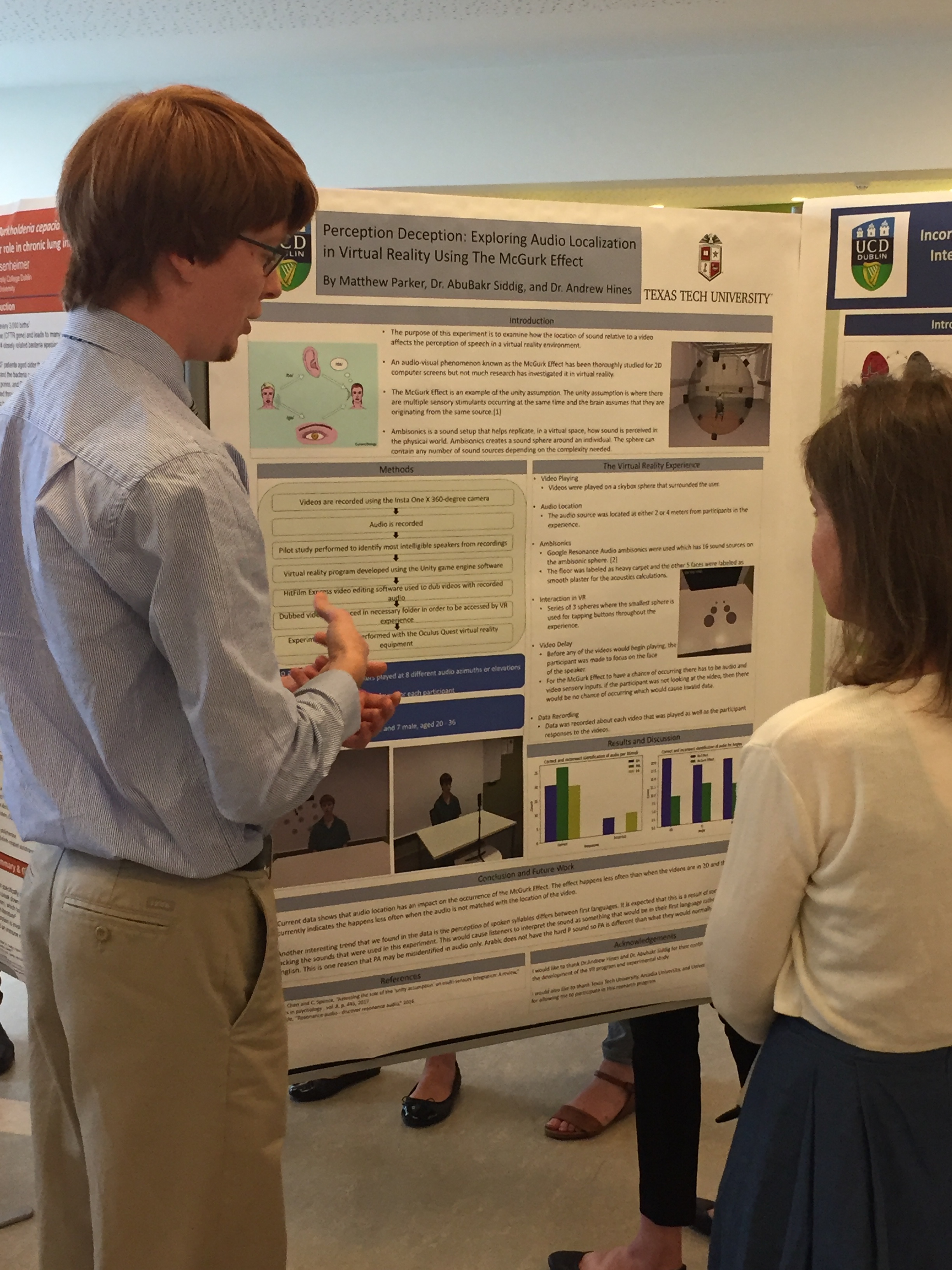

Matthew Parker from Texas Tech University spent the summer at QxLab, hosted by Insight and the School of Computer Science. Over eight weeks, he developed a virtual reality environment to explore audio-visual fusion using the Oculus Quest, the newest generation of wireless VR headset.

The audio and video immersion provided by virtual reality headsets is what makes virtual reality so enticing. A better understanding of this immersion is needed, particularly in streaming applications where bandwidth is limited: the full 360-degree spaces that are the trademark of virtual reality require large amounts of data to render at high quality. His work examined audio localization as a possible candidate for data compression by utilizing an audio-visual phenomenon known as the McGurk Effect. The McGurk Effect occurs when a person experiences mismatched audio and video stimuli and perceives a sound that differs from both. The common example is a video of a talker saying /ga/ dubbed with the audio of someone saying /ba/, which usually results in the perception of /da/.

For audio, he used Ambisonics, a spatial audio format that can be rendered over headphones to mimic how humans naturally hear sounds, using head-related transfer functions (HRTFs) and acoustic environments.
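
To give a rough sense of how Ambisonics represents a sound's direction, here is a minimal sketch that encodes a single mono sample into first-order Ambisonic channels. The post does not say which Ambisonic order or channel convention the project used, so the AmbiX convention (ACN channel order, SN3D normalization) is an assumption here, and the function name `encode_first_order` is hypothetical. Headphone playback would additionally require decoding these channels to binaural audio with HRTFs, which is not shown.

```python
import math

def encode_first_order(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one mono sample into first-order Ambisonics.

    Assumes the AmbiX convention (ACN order W, Y, Z, X with SN3D
    normalization). Azimuth is measured counterclockwise from straight
    ahead, so 90 degrees is directly to the listener's left.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                 # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)   # left-right axis
    z = sample * math.sin(el)                  # up-down axis
    x = sample * math.cos(az) * math.cos(el)   # front-back axis
    return (w, y, z, x)

# A source directly to the left puts its directional energy on the Y channel.
w, y, z, x = encode_first_order(1.0, azimuth_deg=90.0)
```

Because the direction is carried by the relative channel gains, the whole sound field can be rotated to follow head movement before it is decoded for headphones.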