Today is UNESCO World Archives Day, highlighting the important work of archives and archivists in preserving our cultural heritage. The date was chosen to commemorate the creation of the International Council on Archives (ICA), founded on 9 June 1948 under the auspices of UNESCO. According to the ICA, “[a]rchives represent an unparalleled wealth. They are the documentary product of human activity and as such constitute irreplaceable testimonies of past events. They ensure the democratic functioning of societies, the identity of individuals and communities and the defense of human rights.”

Following quickly after the 6th June D-Day commemorations, today is a good day to highlight the important work that has been taking place to digitise and preserve the audio archives of the Nuremberg trials. Witnesses, lawyers and judges were recorded in their native tongues, together with recordings of the live translations. This resulted in 775 hours of original trial audio recorded on 1,942 Presto gramophone discs and translations on embossed tape, a clear-colored film also known as Amertape. While the tape degraded, the discs survived. The digitisation will be published next year, but the fascinating story of the project was recently told in articles for the Verge and PRI by Christopher Harland-Dunaway. University of Fribourg’s Ottar Johnsen worked with Stefano Cavaglieri, a colleague at the Swiss National Sound Archives, and the International Court of Justice’s archivists, using imaging and audio digital signal processing to capture the archive material. You can listen to it here:

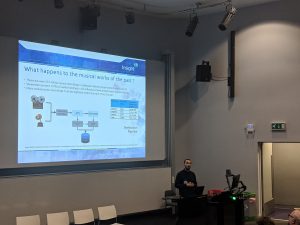

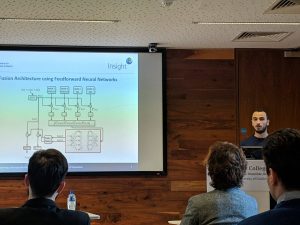

Last week, at the 11th International Conference on Quality of Multimedia Experience (QoMEX), QxLab PhD student Alessandro Ragano presented our work on how audio archive stakeholders perceive quality in archive material. By examining the lifecycle from digitisation through restoration and consumption, the paper highlights the influence factors and stakeholders. At QxLab we are interested in how audio digital signal processing techniques can be used in conjunction with data-driven machine learning to capture, enhance and explore audio archives.

Alessandro’s research is supported in part by a research grant from Science Foundation Ireland (SFI) and is co-funded under the European Regional Development Fund under Grant No. 17/RC-PhD/3483. This publication has emanated from research supported by Insight, which is supported by SFI under grant number 12/RC/2289. EB is supported by RAEng Research Fellowship RF/128 and a Turing Fellowship.

QxLab has two papers at the Irish Signals and Systems Conference in Maynooth University today. MSc student Tong Mo presented work on speech Quality of Experience. Her research investigated computer models for speech quality prediction in systems such as Skype or Google Hangouts. She developed an algorithm to minimise errors in the presence of jitter buffers.

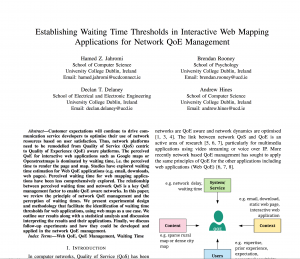

A second paper, entitled “Establishing Waiting Time Thresholds in Interactive Web Mapping Applications for Network QoE Management,” was presented by PhD candidate Hamed Jahromi. Hamed’s work looked at the perception of time in web applications. Is an additional delay of half a second noticeable if you have already waited 5 seconds for a Google Map page to load? Time is not absolute, and Hamed wants to understand the impact of delays on web applications in order to optimise network resources for interactive applications other than speech and video streaming. This work was co-authored with Declan T. Delaney from UCD Engineering and Brendan Rooney from UCD Psychology.

QxLab is participating in two

roller-coaster” compressed into several hours. I accepted the request without reflection (other than a “sure, that needs no preparations…”) and then panicked that my PhD experiences were stale and possibly no longer relevant. Then I reflected that I wasn’t being asked to advise through the lens of a student; the actual question was what advice I could offer as someone who has experienced both sides of the student-advisor relationship. Getting the research question right was an important first step. Next I read a few other blogs, papers and tweets. There is already a large body of work in the area of PhD advice, so I decided to skip the exhaustive literature review and to provide a case-study-style approach focusing purely on my own experience.

I was back at the RDS in Dublin visiting the BT Young Scientist and Technology Exhibition. The exhibition began in 1963, its concept created by two UCD academics from the School of Physics. Fast forward to 30 years ago, when I participated for the first of two visits. Arriving again to visit thirty years later, I was struck by the professional finish of the posters. So much has improved, but I still love the handmade stands and eye-catching props to lure you into a project. As you can see from the newspaper clipping, our project may have involved a lot of hot air, but I recall there was some scientific rigour to our methodology!

I met the 2019 winner of the BT Young Scientist and Technology Exhibition, Adam Kelly, while judging the national finals of SciFest 2018, where he also won first prize. As a judge I was struck by his demonstration of all the attributes of a quality scientist: imagination, methodology and a great ability to communicate the work. He knew what he had done and was able to explain what he had not done, and why. Adam’s project for SciFest was entitled ‘An Open Source Solution to Simulating Quantum Computers Using Hardware Acceleration’ and was the overall winner out of more than 10,000 students who competed in the regional heats to progress to the national SciFest 2018 final.

QxLab was well represented with two papers: Rahul, who is funded by a scholarship from the SFI

Alessandro also had a

QxLab’s Alessandro Ragano was in London’s Abbey Road Studio with his team, ‘the Tailors’. The ‘Tailors’ helped find a way to provide an artificial companion for singers and songwriters, taking lyrics and sentiment using Microsoft’s Cognitive APIs.

The SFI CONNECT research centre for future networks has funded the recruitment of a new postdoctoral research fellow at QxLab. Dr AbuBakr Siddig completed a PhD at University College Dublin and an MSc at Blekinge Institute of Technology, Sweden. He brings experience in digital signal processing and 802.11 wireless networking to the group. Welcome AbuBakr!