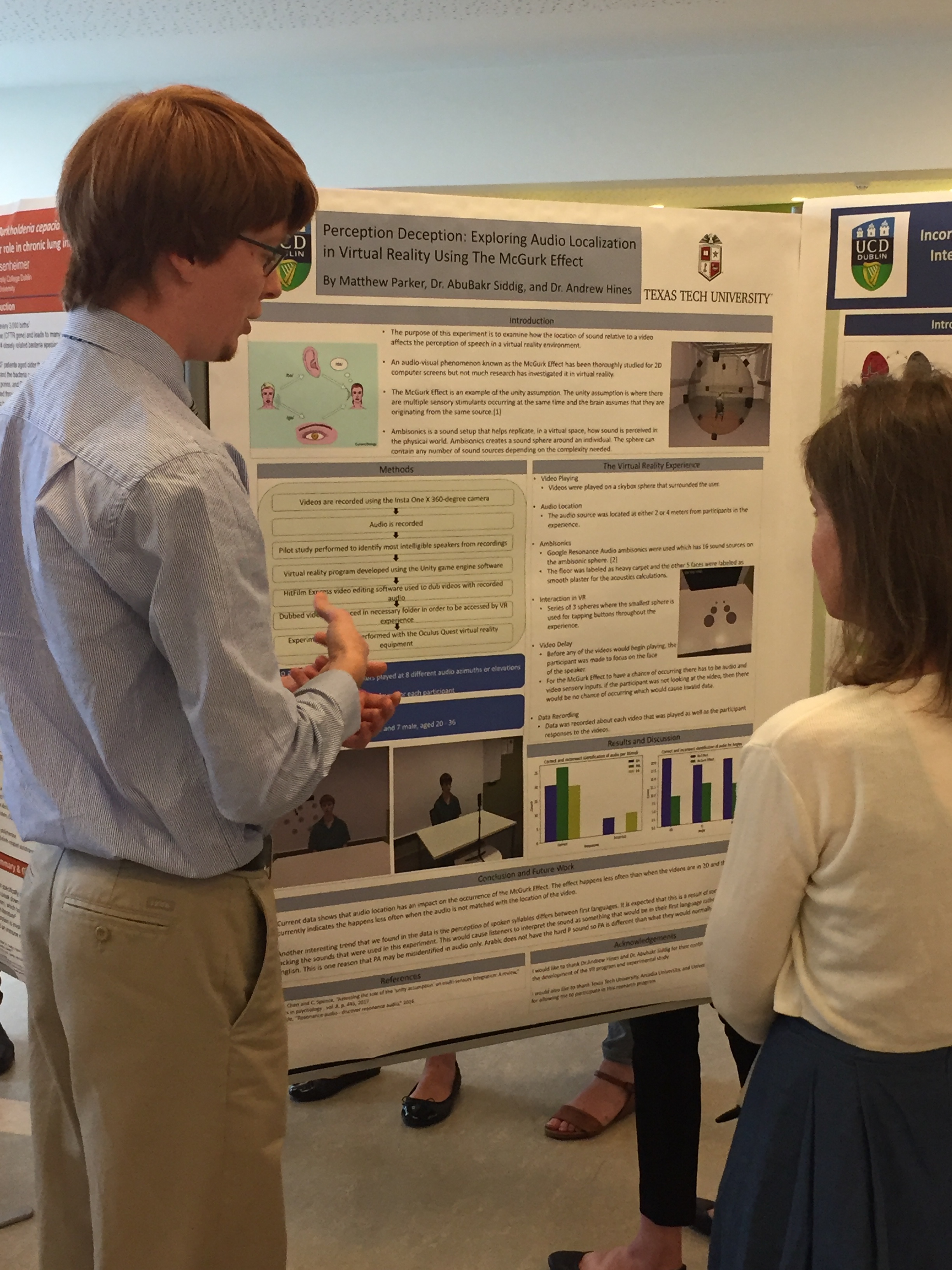

QxLab took a couple of days away from our desks for a co-located writing workshop. Basing ourselves in one of UCD’s new University Club meeting rooms, we spent two days working on our research writing. A starter session reflected on writing style and discussed where to publish. We practised free writing and structured writing exercises and reviewed Brown’s eight questions as a set of writing prompts.

- Who are the intended readers? (3-5 names)

- What did you do? (50 words)

- Why did you do it? (50 words)

- What happened? (50 words)

- What do the results mean in theory? (50 words)

- What do the results mean in practice? (50 words)

- What is the key benefit for readers? (25 words)

- What remains unresolved? (no word limit)

These questions, originally devised by Robert Brown but popularized by Rowena Murray, are a great way to get a writing retreat going. The rest of the sessions were spent progressing our writing towards our personal writing objectives – a bit like a natural language “Hackathon”.

For the final session, each of us chose a short piece of our own writing and shared it in a non-judgemental peer review session where the author could set the scope of the feedback they wanted. Much of the feedback followed common themes, as we had fallen into similar traps in our writing.

A recent Twitter thread offered plenty of advice we could relate to, and a key take-home message was that when you read published papers you only see the finished article. Those papers have been through countless iterations and rounds of feedback from co-authors, reviewers and copy editors. Don’t compare your first draft to a published paper!

Useful references

Murray, R. (2005) Writing for Academic Journals. Maidenhead: Open University Press/McGraw-Hill.

Murray, R. & Moore, S. (2006) The Handbook of Academic Writing: A Fresh Approach. Maidenhead: Open University Press/McGraw-Hill.

Pheobe participated in the Abbey Road Music Tech Hackathon, winning two awards: Best example of spatial audio and Best use of Audio Commons.

Wissam is a research fellow working on quality prediction for GAN-based codecs. His research is funded by a new grant awarded to QxLab by Google. He will be working closely with the Google Chrome team to develop a new speech quality model that works with generative speech codecs.

Alex is an IRC Government of Ireland Postdoctoral Fellow developing Quality of Experience (QoE)-centric management of multimedia services and orchestration of resources in 5G networks using software-defined networking (SDN) and network function virtualisation (NFV).

QxLab has two papers at the

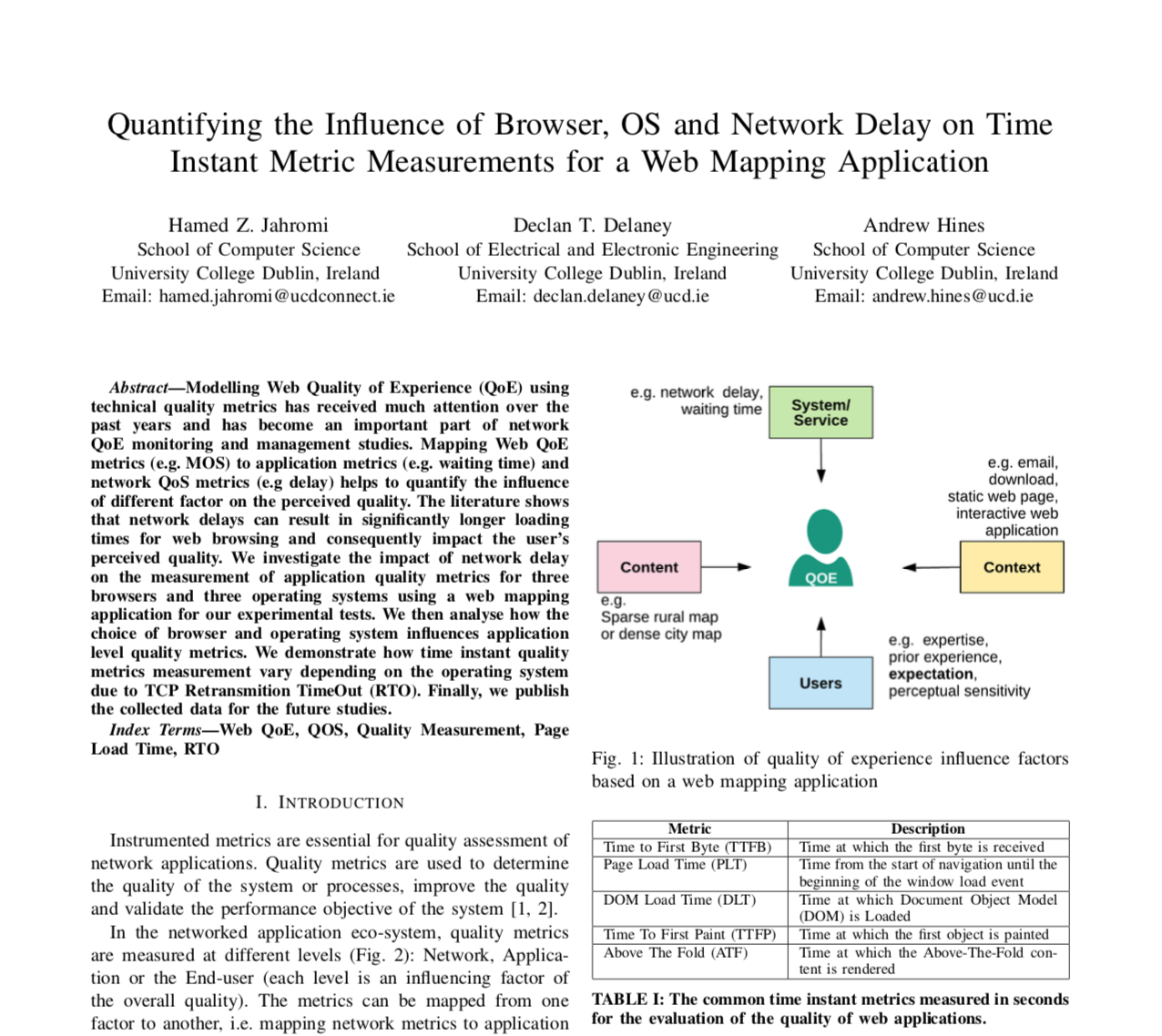

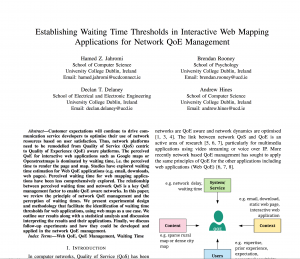

PhD candidate Hamed Jahromi presented a second paper, entitled “Establishing Waiting Time Thresholds in Interactive Web Mapping Applications for Network QoE Management.” Hamed’s work looked at the perception of time in web applications: is an additional half-second delay noticeable if you have already waited 5 seconds for a Google Maps page to load? Perceived waiting time is not absolute, and Hamed wants to understand the impact of delays on web applications in order to optimise network resources for interactive applications beyond speech and video streaming. This work was co-authored with Declan T. Delaney from UCD Engineering and Brendan Rooney from UCD Psychology.

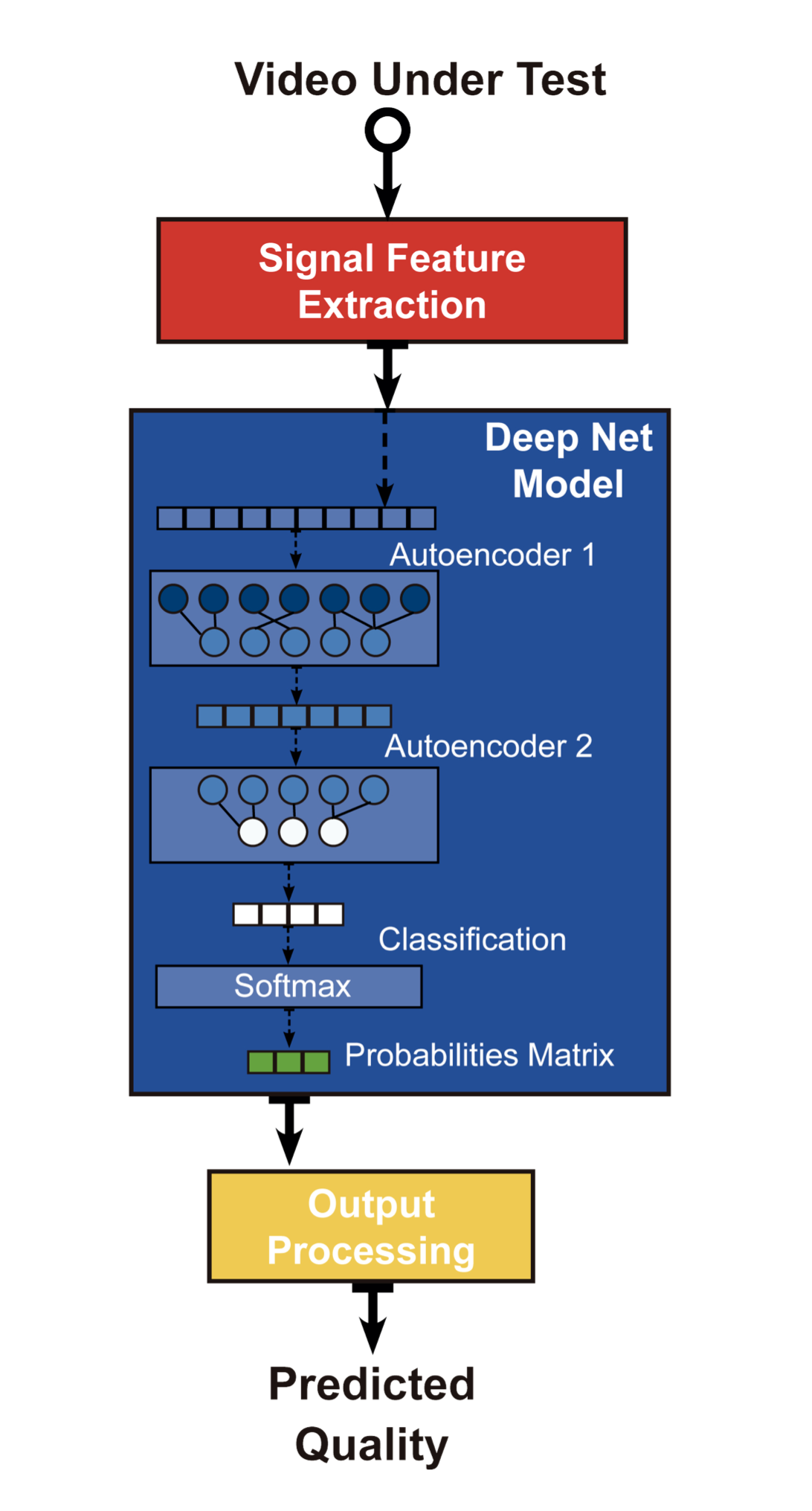

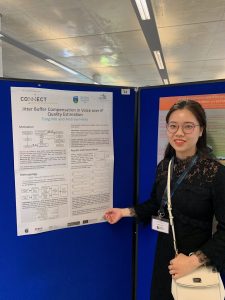

Last week, at the 11th International Conference on Quality of Multimedia Experience (QoMEX), QxLab PhD student Alessandro Ragano presented our