The 26th Irish Conference on Artificial Intelligence and Cognitive Science was hosted by Trinity College Dublin on the 6th and 7th of December in the Long Room Hub. The conference covered a wide variety of topics across computer science, psychology and neuroscience, which made for some interesting presentations, including one that asked whether Amazon’s Alexa suffers from Alexithymia.

QxLab was well represented with two papers. Rahul, who is funded by a scholarship from the SFI CONNECT research centre for future networks, presented his work on voice over IP quality monitoring in a talk entitled “The Sound of Silence: How Traditional and Deep Learning Based Voice Activity Detection Influences Speech Quality Monitoring”. In his paper he investigated how different voice activity detection strategies can influence the outputs of a speech quality prediction model. His experiment used a dataset labelled with subjective quality judgments.

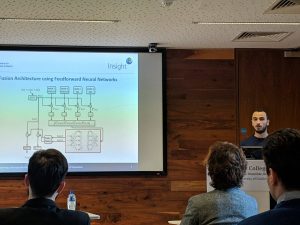

Alessandro also had a paper in the proceedings and gave an interesting talk exploring DNN-based fusion for speech separation. Alessandro’s PhD is funded by the SFI Insight Centre for Data Analytics.

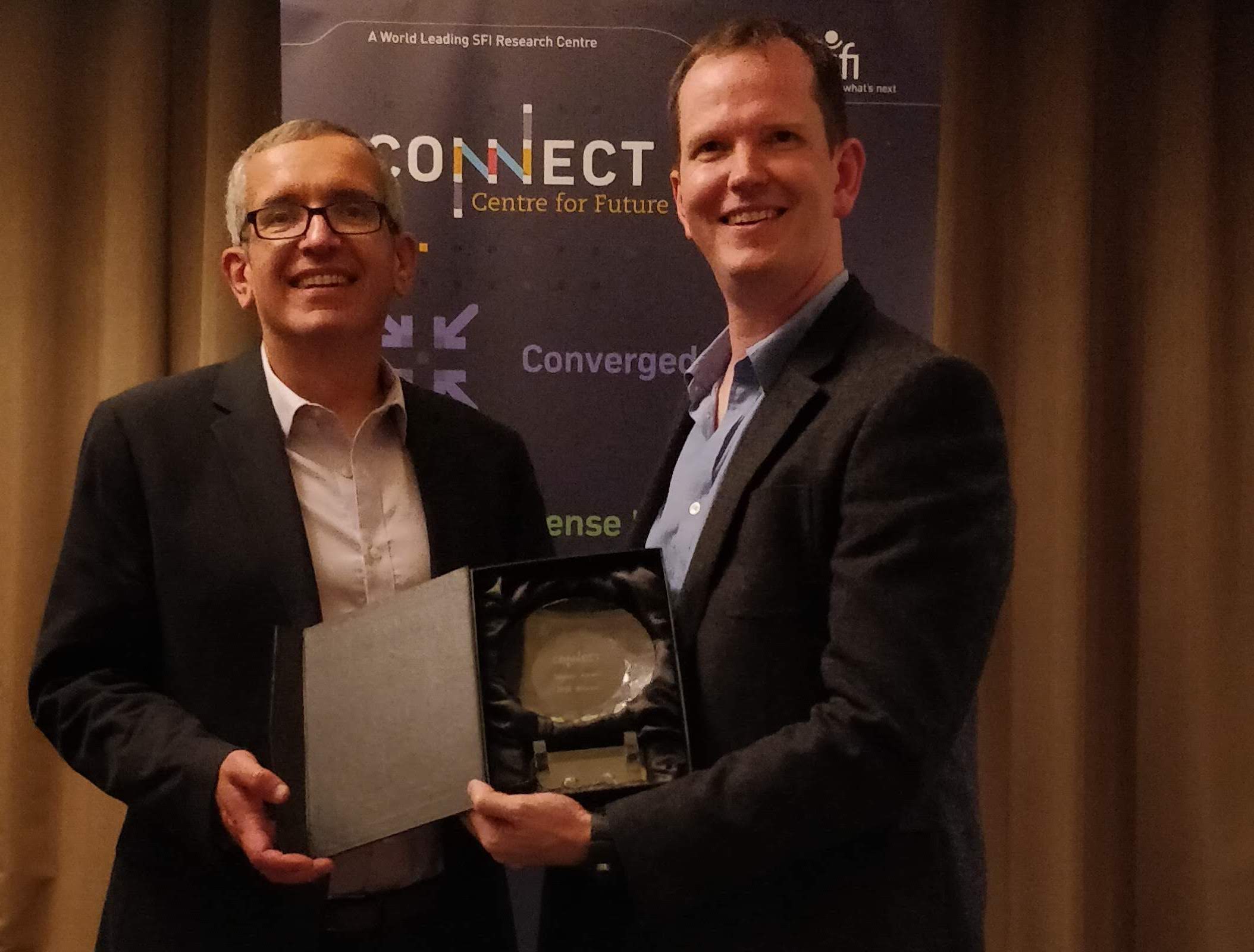

Dr Andrew Hines accepted the inaugural CONNECT Research Impact Award in recognition of a technique, developed in collaboration with Google, which is now used to assess the audio quality of YouTube’s most popular videos.